When you create a WordPress blog or website, WordPress automatically generates a robots.txt file for the site. This file is an important part of your website’s SEO because search engines consult it when crawling your content.

If you want to take your site SEO to the next level, optimizing the robots.txt file on your WordPress site is important, but unfortunately not as simple as adding keywords to your content. That’s why we’ve put together this guide to the WordPress robots.txt file so you can start tweaking it and improving your search rankings.

What is a Robots.txt file?

To place websites on search engine results pages (SERPs), search engines like Google “crawl” website pages and analyze their content. A website’s robots.txt file tells crawler “bots” which pages to crawl and which to skip.

You can view the robots.txt file for any website by typing /robots.txt after the domain name.
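As a minimal sketch of that rule, the Python snippet below turns any page address into the address of the site's robots.txt file. The function name `robots_txt_url` and the example.com address are placeholders chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that hosts page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and domain, then replace the path with /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/hello-world/"))
# https://example.com/robots.txt
```

Pasting the printed address into a browser shows the file exactly as crawlers see it.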

User-agent:

The user agent in a robots.txt file names the search engine crawler that the rules below it apply to. When the user agent is an asterisk (*), the rules apply to all search engines.

Most sites are happy when all search engines crawl their sites, but sometimes you may want to block all search engines except Google from crawling your site or provide specific instructions on how search engines like Google News or Google Images crawl your site.

In that case, you need to find the user agent name of each search engine crawler that you want to instruct. These are easy to find online, but here are some of the main ones:

- Google: Googlebot

- Google News: Googlebot-News

- Google Images: Googlebot-Image

- Google Video: Googlebot-Video

- Bing: Bingbot

- Yahoo: Slurp
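To see how a crawler-specific rule behaves, here is a small sketch that uses Python's standard `urllib.robotparser` module. The robots.txt content, the /private-images/ folder, and the example.com address are made up for illustration. Python's parser is also simpler than a real search engine's, so treat the result as a rough check rather than a guarantee of how Google or Bing will behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: only Google Images is kept out of one folder.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /private-images/

User-agent: *
Disallow:
"""


def parse_robots(text: str) -> RobotFileParser:
    """Parse robots.txt content held in a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = parse_robots(ROBOTS_TXT)
image_url = "https://example.com/private-images/team.png"

print(rules.can_fetch("Googlebot-Image", image_url))  # False
print(rules.can_fetch("Bingbot", image_url))          # True
```

An empty Disallow line means "nothing is blocked", so every crawler other than Googlebot-Image can still reach the folder.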

Allow and Disallow:

In robots.txt files, allow and disallow tell bots which pages and content they can and cannot crawl. If, as mentioned above, you want to block all search engines except Google from crawling your site, you can use the following robots.txt file:

The slash (/) after “Disallow” and “Allow” stands for the whole site, so it tells the bot whether or not it has permission to crawl all pages. You can also put a specific path after the directive, such as /wp-admin/, to allow or prevent the bot from crawling just that page or folder.
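The two cases described in this section can be sketched as follows: one file that blocks every crawler except Google, and one that blocks a single page for everyone. The /thank-you/ path and the helper `is_allowed` are hypothetical, and the check uses Python's standard `urllib.robotparser`, which only approximates how real crawlers interpret the file.

```python
from urllib.robotparser import RobotFileParser

# Block every crawler except Googlebot.
ONLY_GOOGLE = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""

# Block one specific page (a made-up /thank-you/ page) for all crawlers.
BLOCK_ONE_PAGE = """\
User-agent: *
Disallow: /thank-you/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check whether user_agent may crawl url under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ONLY_GOOGLE, "Googlebot", "https://example.com/blog/"))     # True
print(is_allowed(ONLY_GOOGLE, "Bingbot", "https://example.com/blog/"))       # False
print(is_allowed(BLOCK_ONE_PAGE, "Bingbot", "https://example.com/thank-you/"))  # False
```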

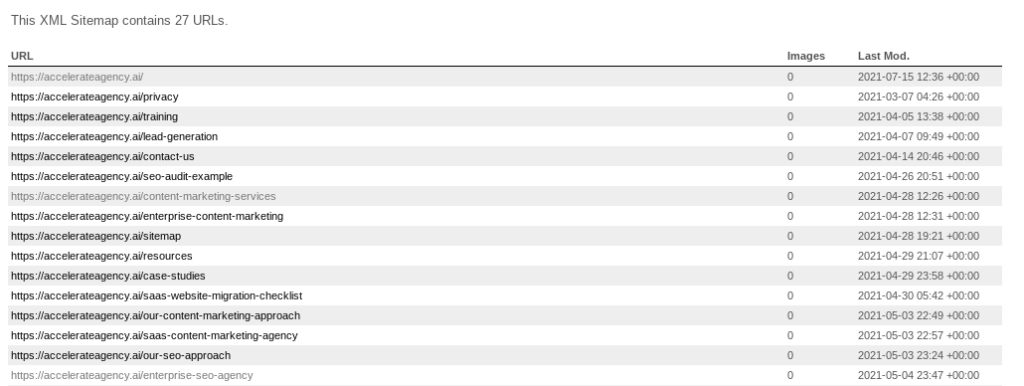

Sitemap:

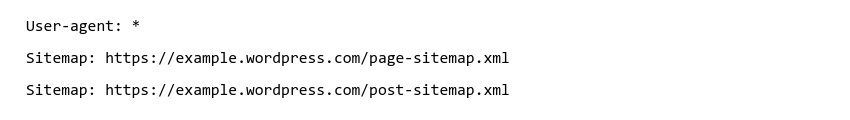

The “sitemap” referenced in a robots.txt file is an XML file that lists the pages on your website along with details about each one.

The sitemap contains all the web pages that you want the bot to discover. It is especially useful for pages that you want to appear in search results but that are not typical landing pages, such as blog posts.
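To give a feel for what such an XML file contains, here is a minimal sketch that builds one with Python's standard library. The page addresses and dates are invented, `build_sitemap` is a hypothetical helper, and a WordPress plugin would normally generate this file for you. Only the `loc` and `lastmod` fields from the sitemaps.org format are shown.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from a list of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    ET.indent(urlset)  # pretty-printing; requires Python 3.9 or newer
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Illustrative pages only; replace with your own URLs and dates.
print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/hello-world/", "2024-02-01"),
]))
```

Each `url` entry in the output describes one page, which is how crawlers learn about posts that are not linked from your main landing pages.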

Sitemaps are especially important for WordPress users who want to enrich their sites with blog posts and category pages. Many of these pages may not show up in the SERPs if they are not listed in a sitemap that your robots.txt file points to.

These are the main aspects of a robots.txt file. However, it should be noted that your robots.txt file is not a foolproof way to keep certain pages out of search results. For example, if another site links to a page that you disallowed in your robots.txt file, search engines can still index that page’s URL even though they do not crawl its content.

Do You Need a Robots.txt File on WordPress?

If you have a WordPress website or blog, you will already have an automatically generated robots.txt file. Here are some reasons why it’s important to pay attention to your robots.txt file if you want to maintain an SEO-friendly WordPress site.

You Can Optimize Your Crawl Budget

A crawl budget, or crawl quota, is the number of pages that search engine robots will crawl on your site in a given period. If you don’t have an optimized robots.txt file, you could be wasting your crawl budget on unimportant pages and keeping bots from reaching the pages that you want to appear first in the SERPs.

If you sell products or services through your WordPress site, the crawler bots should ideally prioritize the pages with the best sales conversion.

You Can Prioritize Your Important Landing Pages

By optimizing your robots.txt file, you can ensure that the landing pages you want to appear first in the SERPs are quick and easy for crawlers to find. Splitting your sitemap into a “pages” sitemap and a “posts” sitemap is especially useful for this, as it helps your blog posts appear in the SERPs rather than just your default landing pages.

For example, if your site has many pages, and customer data shows that blog posts are generating a lot of purchases, you can use sitemaps in the robots.txt file to ensure that blog posts appear in the SERPs.

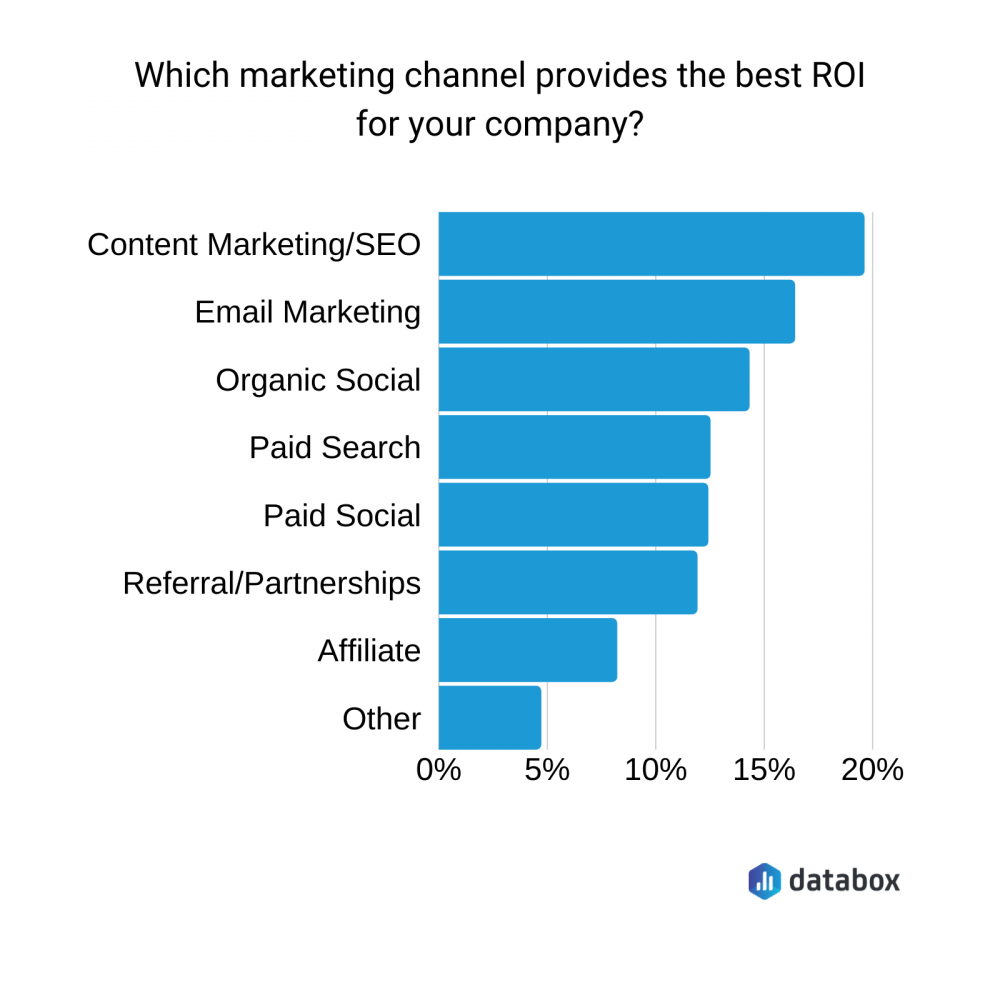

You Can Improve the Overall SEO Quality of Your Website

Marketers are well aware of the strong return on investment of search engine optimization. Funneling organic searches to your SEO-focused site is cheaper and generally more effective than paid ads and affiliate links, although both are still useful.

Optimizing your robots.txt file is not the only way to improve the search ranking of your website or blog. You will still need to have SEO-compliant content on the pages themselves, which may require the help of an SEO SaaS provider. However, editing your robots.txt file is something you can easily do yourself.

How to Edit a Robots.txt File on WordPress

If you want to edit your robots.txt file in WordPress, there are several ways to do it. The best and easiest option is to add a plugin through the WordPress dashboard.

Add an SEO Plugin to Your WordPress:

This is the easiest way to edit your WordPress robots.txt file. There are many good SEO plugins that allow you to edit the robots.txt file. Some of the most popular are Yoast, Rank Math, and All In One SEO.

Add a Robots.txt Plugin to Your WordPress:

There are also WordPress plugins specifically designed to edit your robots.txt file. Popular robots.txt plugins are Virtual Robots.txt, WordPress Robots.txt Optimization, and Robots.txt Editor.

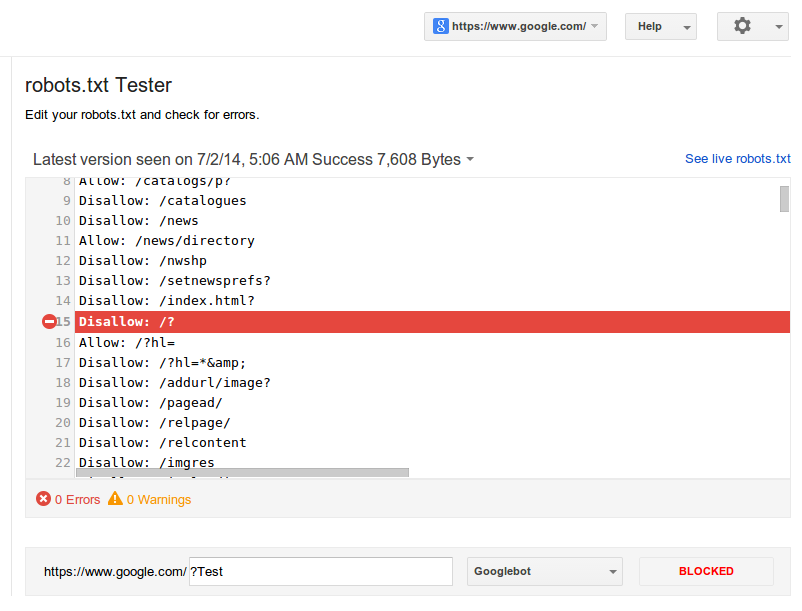

How to Test Your WordPress Robots.txt File

If you’ve edited your robots.txt file, it’s important to test it to make sure you haven’t made any mistakes. Errors in your robots.txt file can cause your site to disappear from the SERPs entirely.

Google Search Console (formerly Google Webmaster Tools) has a robots.txt testing tool that you can use for free to test your file. To use it, just add your homepage URL. The robots.txt file will appear, and a “syntax warning” or “logical error” will be shown next to each line of the file that does not work.

You can then enter a specific page on your site and select a user agent to run a test that shows whether the page is “accepted” or “blocked.” You can edit your robots.txt file in the test tool and run the test again if necessary. Note that this will not change your actual file: you will need to copy and paste the edited rules into your robots.txt editor and save them there.
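If you want a quick local check before using an online tester, the sketch below flags a few common mistakes. It is a simplified, hypothetical linter written for this guide, not the logic behind Google's tool, and the list of known directives and the sample file are assumptions for illustration.

```python
# Directives this simple checker recognizes (an assumption, not a full list).
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text):
    """Return a list of (line_number, message) pairs for suspicious lines."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        directive, value = (part.strip() for part in line.split(":", 1))
        directive = directive.lower()
        if directive not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{directive}'"))
        elif directive == "user-agent":
            seen_user_agent = True
        elif directive in {"allow", "disallow"}:
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


# Sample file with three deliberate mistakes.
SAMPLE = """\
User-agent: *
Dissallow: /wp-admin/
Disallow: wp-content/plugins/
Disallow /readme.html
"""

for line_number, message in lint_robots_txt(SAMPLE):
    print(f"line {line_number}: {message}")
```

A clean file returns an empty list. Passing this check does not mean search engines will read the file the way you intend, so it is still worth running the real tester afterward.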

How to Optimize Your WordPress Robots.txt File for SEO

The easiest way to optimize your robots.txt file is to select the pages you want to disallow. On WordPress, typical pages that you might disallow are /wp-admin/, /wp-content/plugins/, /readme.html, /trackback/.

For example, a SaaS marketing provider has many different pages and posts on their WordPress site. By disallowing pages like /wp-admin/ and /wp-content/plugins/, they can ensure that the crawling bots prioritize the pages they value.
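As a sketch, the disallow list above can be turned into robots.txt rules and checked like this. The helper `build_robots_txt` and the example.com addresses are hypothetical, and the check relies on Python's standard `urllib.robotparser`, which is only an approximation of how search engines read the file.

```python
from urllib.robotparser import RobotFileParser

# Typical WordPress paths to keep crawlers away from (taken from the list above).
WORDPRESS_PATHS = ["/wp-admin/", "/wp-content/plugins/", "/readme.html", "/trackback/"]


def build_robots_txt(disallowed_paths, user_agent="*"):
    """Create robots.txt text that disallows each given path for user_agent."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(WORDPRESS_PATHS)
print(robots_txt)

# Confirm that admin pages are blocked while normal posts stay crawlable.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("Googlebot", "https://example.com/wp-admin/options.php"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/pricing-guide/"))   # True
```

You would paste the printed rules into your SEO plugin's robots.txt editor rather than upload them from a script.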

Create Sitemaps and Add Them to Your Robots.txt File

WordPress creates its own generic sitemap when you create a blog or website with it. It can usually be found at example.wordpress.com/sitemap.xml. If you want to customize your sitemap and create additional sitemaps, you can use a WordPress SEO plugin and then reference the sitemaps from your robots.txt file.

You can access your plugin from the WordPress dashboard and it should have a section to enable and edit your sitemap. Good plugins will allow you to easily create and customize additional sitemaps, such as a “pages” sitemap and a “posts” sitemap.

Once the sitemaps are set up, add them to your robots.txt file with one Sitemap line per sitemap, each giving that sitemap’s full URL.
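Here is a minimal sketch of what those lines can look like, along with a quick check that they are readable. The sitemap file names and the example.com domain are placeholders, since your plugin decides the real addresses, and `site_maps()` requires Python 3.8 or newer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with one Sitemap line per sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/post-sitemap.xml
Sitemap: https://example.com/page-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the sitemap URLs found in the file, or None if there are none.
sitemaps = parser.site_maps()
print(sitemaps)
```

Sitemap lines apply to the whole file, not to one user agent, so they can sit at the top or bottom of robots.txt.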

In this guide, we have provided you with everything you need to know about WordPress robots.txt files. From explaining what robots.txt files are to delving into why and how you should optimize your robots.txt file for SEO, this article will help you if you want to find simple and effective ways to improve your WordPress site’s search ranking.